Threat or Theory: How realistic are “Dolphin Attacks” on voice-controlled systems?

Are inaudible voice commands, so-called “Dolphin Attacks”, a serious threat to voice-controlled smart devices? A team of Chinese researchers has published a paper explaining how they used ultrasonic commands, inaudible to the human ear, to take control of popular voice assistants such as Amazon Alexa, Google Now and Apple’s Siri.

The more devices are connected to the Internet of Things, the more we hear about security flaws being exploited to carry out large-scale attacks on computer systems. Some already call the IoT the “Internet of Trash” rather than the “Internet of Things”. But once again, it is probably not the IoT itself that is to blame, but the design of the voice recognition devices connected to it. That design leaves them vulnerable to so-called “Dolphin Attacks”: inaudible, ultrasonic voice commands.

Speech Recognition becomes omnipresent in our everyday life

As speech recognition has improved dramatically over the past years, Voice Controlled Systems (VCS) have become increasingly popular. They first appeared on a larger scale in cars a few years ago, letting drivers control the radio, navigation and other systems without taking their eyes off the road or their hands off the steering wheel. Today, virtually any smartphone can be voice-controlled, whether with Siri (Apple), Google Now (Android) or Cortana (Microsoft). With home assistants such as Amazon Alexa or Google Home, speech recognition has completed its triumphal march from the road via the smartphone into our homes. These home assistants use a so-called “always on” feature: their microphones are permanently open, listening for possible commands.

But how can these devices be made to react to voices nobody can hear? The human ear usually cannot perceive frequencies above 20 kHz, but the microphones built into these devices still pick them up.

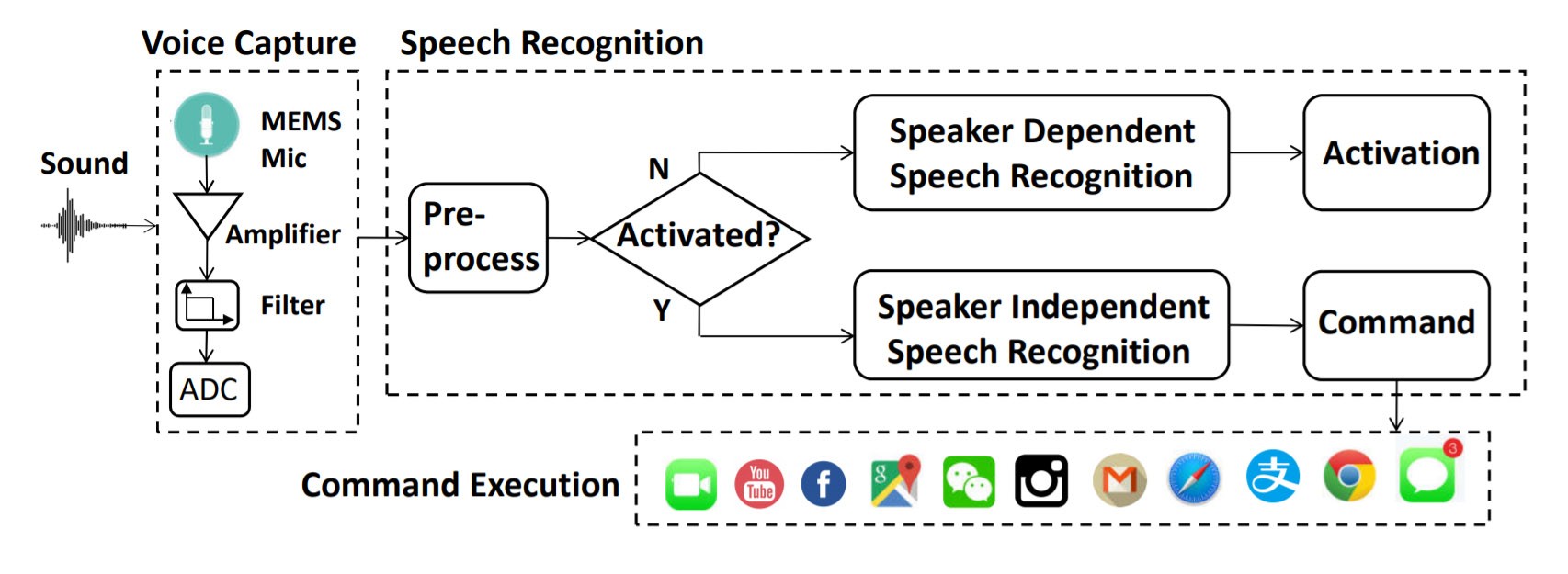

Architecture of state-of-the-art voice recognition systems such as Amazon Echo, Google Now and Siri. An initial command activates the device; everything spoken after activation is sent to the cloud for further processing. Many systems are vulnerable to inaudible, ultrasonic commands. Source: DolphinAttack: Inaudible Voice Commands

“Typically, a speech recognition system works in two phases: activation and recognition. During the activation phase, the system cannot accept arbitrary voice inputs, but it waits to be activated. To activate the system, a user has to either say pre-defined wake words or press a special key. For instance, Amazon echo takes “Alexa” as the activation wake word.”, the research paper states.

While Amazon’s Alexa uses a speaker-independent speech recognition algorithm to detect the activation command, and therefore responds to anyone nearby, Apple’s Siri is speaker-dependent and must be trained by its owner prior to use. This activation step is carried out on the device itself. Once the device is activated, the commands that follow are recorded and sent to a cloud-based speech recognition service for further processing.
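The two-phase flow described above can be modelled as a small state machine. The sketch below is a toy model, not how Alexa or Siri are actually implemented: `VoiceAssistant`, `Phase` and `hear` are hypothetical names, speaker identity is reduced to a string label instead of real voice verification, the cloud service is stubbed as a list, and the device is assumed to return to the activation phase after each command.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import List, Optional


class Phase(Enum):
    ACTIVATION = auto()   # on-device: waiting for the wake word
    RECOGNITION = auto()  # activated: the next utterance goes to the cloud


@dataclass
class VoiceAssistant:
    wake_word: str  # expected in lower case
    # None models a speaker-independent wake-word detector (Alexa-style);
    # a label models a speaker-dependent one trained by the owner (Siri-style).
    owner_voice: Optional[str] = None
    phase: Phase = Phase.ACTIVATION
    cloud_queue: List[str] = field(default_factory=list)

    def hear(self, utterance: str, speaker: str) -> None:
        if self.phase is Phase.ACTIVATION:
            # Local check: the right words and, if required, the owner's voice.
            words_match = utterance.lower() == self.wake_word
            voice_ok = self.owner_voice is None or speaker == self.owner_voice
            if words_match and voice_ok:
                self.phase = Phase.RECOGNITION
            return  # anything else is ignored while waiting for activation
        # Activated: forward the command to cloud recognition (stubbed as a list),
        # then fall back to waiting for the wake word (a simplifying assumption).
        self.cloud_queue.append(utterance)
        self.phase = Phase.ACTIVATION


echo = VoiceAssistant(wake_word="alexa")
echo.hear("Alexa", speaker="visitor")
echo.hear("turn on the lights", speaker="visitor")

siri = VoiceAssistant(wake_word="hey siri", owner_voice="owner")
siri.hear("Hey Siri", speaker="visitor")
siri.hear("call 555-0100", speaker="visitor")

print(echo.cloud_queue)  # ['turn on the lights']
print(siri.cloud_queue)  # []
```

The speaker-independent model accepts the visitor's wake word and forwards the command, while the speaker-dependent one never leaves the activation phase. This is exactly the "first door" an attacker has to get through.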

Dolphin Attacks: How to silently hack into a device

Before any command can be sent to the device, the wake word has to be delivered. Since some systems, such as Apple’s Siri, use voice authentication at this stage, opening this first door is a bit trickier: you not only need to say the right words, you need to say them in the owner’s voice.

The researchers used a brute-force-like approach based on Text-to-Speech (TTS) engines. They tried artificial voices from different TTS engines, hoping to find some that sounded similar enough to a device’s owner for the wake word to be accepted. Working through nine different TTS systems with a wide variety of voice types, they succeeded with almost every engine.

The results show that the control commands from any of the TTS systems can be recognized by the SR system. 35 out of 89 types of activation commands can activate Siri, resulting in a success rate of 39%. Source: DolphinAttack: Inaudible Voice Commands

Had the TTS approach proven insufficient, the alternative would have been to secretly record the owner’s voice for a while and then splice the relevant phonemes together into a “Hey Siri”. For example, a “hey” can be assembled from the phonemes in “he” and “cake”, and the words “city” and “carry” are good enough for a “Siri”.

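To make the commands inaudible and allow a covert attack, the research team amplitude-modulated the so-called baseband signal of the voice command (the normal, audible recording) onto an ultrasonic carrier wave. In simple terms: the command rides on a carrier whose frequency is too high for the human ear. The microphone, however, does not behave perfectly linearly, and this nonlinearity demodulates the signal back into an audible-frequency voice command that the speech recognition then processes as usual.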
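To illustrate the idea, here is a minimal NumPy simulation, assuming standard amplitude modulation and a microphone whose nonlinearity is modelled by a simple quadratic term, `a*s + b*s**2`. A 400 Hz tone stands in for the voice command, the 25 kHz carrier is one of the frequencies the portable setup supports, and all function names and coefficients are hypothetical. The transmitted signal has no energy in the audible band, yet after the nonlinear microphone and its low-pass filter the 400 Hz command reappears.

```python
import numpy as np

FS = 192_000  # simulation sample rate in Hz (assumed); well above twice the carrier
FC = 25_000   # ultrasonic carrier in Hz (assumed for illustration)


def am_modulate(baseband, fc=FC, fs=FS):
    """Amplitude modulation with carrier: (1 + m(t)) * cos(2*pi*fc*t)."""
    t = np.arange(len(baseband)) / fs
    return (1.0 + baseband) * np.cos(2 * np.pi * fc * t)


def nonlinear_microphone(signal, a=1.0, b=0.1):
    """Toy microphone: out = a*s + b*s**2 (coefficients are made up)."""
    return a * signal + b * signal ** 2


def lowpass(signal, cutoff, fs=FS):
    """Ideal low-pass filter, standing in for the audio front end before the ADC."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), 1 / fs)
    spectrum[freqs > cutoff] = 0
    return np.fft.irfft(spectrum, n=len(signal))


def dominant_frequency(signal, lo, hi, fs=FS):
    """Frequency (Hz) with the largest magnitude inside [lo, hi]."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), 1 / fs)
    band = (freqs >= lo) & (freqs <= hi)
    return float(freqs[band][np.argmax(spectrum[band])])


def band_energy(signal, lo, hi, fs=FS):
    """Sum of squared FFT magnitudes inside [lo, hi]."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), 1 / fs)
    return float(spectrum[(freqs >= lo) & (freqs <= hi)].sum())


t = np.arange(FS) / FS                       # one second of samples
command = 0.5 * np.sin(2 * np.pi * 400 * t)  # 400 Hz tone as a stand-in "voice command"

transmitted = am_modulate(command)           # energy only at 24.6, 25 and 25.4 kHz
recorded = lowpass(nonlinear_microphone(transmitted), cutoff=8_000)

# Audible-band energy of the transmitted signal is negligible compared with the recording.
print(band_energy(transmitted, 20, 20_000) < 1e-6 * band_energy(recorded, 20, 20_000))  # True
print(round(dominant_frequency(recorded, 20, 20_000)))  # 400
```

The squared term does the demodulation: (1 + m)² cos²(2π·fc·t) contains the baseband part (1 + m)²/2, which includes m(t) itself, while everything near the carrier is removed by the low-pass filter.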

As attack hardware, the team used two different setups. The first was a stationary system consisting of a smartphone as the signal source, a signal generator as the modulator and a wide-band dynamic ultrasonic speaker able to transmit signals between 9 kHz and 50 kHz.

The second was a portable system: a Samsung Galaxy S6 with an amplifier and an ultrasonic transducer connected to the phone’s headphone jack. This setup is limited to signals at 23, 25, 33, 40 and 48 kHz.

With these setups, the Chinese researchers claim to have sent covert commands to a range of devices, including iPhones, the Apple Watch, the Amazon Echo, several Android smartphones from Samsung and Huawei, and an Audi Q3. They made the devices call specific phone numbers, activate the camera, turn on airplane mode and activate the navigation system.

How realistic are Dolphin Attacks?

Since many (though not all) smartphones need to be unlocked physically before they accept voice commands, it seems unlikely that a hidden ultrasonic command could unlock a device without catching the owner’s attention. Moreover, to succeed, the research team had to get within 25 cm to 1 meter of the targeted devices, such as an Apple Watch or a smartphone. Given the equipment required, it seems unlikely that such an attack could easily be carried out unnoticed. Nevertheless, when confronted with the paper, Google and Amazon stated that they were looking into the findings.

Possible defense mechanisms

To make voice-controlled devices less vulnerable to “Dolphin Attacks”, the research team proposed several hardware- and software-based countermeasures. First, microphones should be designed to suppress any acoustic signals in the ultrasound range. The iPhone 6 Plus already seems to do this, since it withstood inaudible commands very well; the iPhone 7 Plus, unfortunately, was less resistant. Second, for legacy microphones, an add-on module could detect modulated voice commands and cancel the baseband carrying these commands before further processing. Third, software-based defenses are conceivable: once demodulated, an inaudible voice command shows differences in certain frequency ranges compared with genuine speech, which could be detected so that the illegitimate command is rejected.
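The first, hardware-side proposal can be illustrated with a toy simulation: an amplitude-modulated 400 Hz tone on a 25 kHz carrier (both assumed values) passes through a microphone modelled as `a*s + b*s**2`. The helper names, coefficients and idealised filters are hypothetical; the sketch only shows that suppressing ultrasound before the nonlinear stage leaves nothing for the nonlinearity to demodulate.

```python
import numpy as np

FS = 192_000  # simulation sample rate in Hz (assumed)
FC = 25_000   # ultrasonic carrier in Hz (assumed)


def ideal_lowpass(signal, cutoff, fs=FS):
    """Zero every frequency component above `cutoff` (idealised filter)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), 1 / fs)
    spectrum[freqs > cutoff] = 0
    return np.fft.irfft(spectrum, n=len(signal))


def microphone(acoustic, suppress_ultrasound, a=1.0, b=0.1):
    """Toy microphone chain; a and b are made-up nonlinearity coefficients."""
    if suppress_ultrasound:
        # Hypothetical hardened front end: reject sound above 20 kHz
        # before it reaches the nonlinear stage.
        acoustic = ideal_lowpass(acoustic, 20_000)
    electrical = a * acoustic + b * acoustic ** 2  # nonlinearity demodulates AM
    return ideal_lowpass(electrical, 8_000)        # audio-band filter before the ADC


def audible_rms(signal, lo=100, hi=8_000, fs=FS):
    """RMS amplitude of the components between lo and hi Hz."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), 1 / fs)
    band = (freqs >= lo) & (freqs <= hi)
    # Parseval: in a one-sided spectrum each bin contributes 2*|X|^2 / N^2 to the power.
    return float(np.sqrt(2 * np.sum(np.abs(spectrum[band]) ** 2)) / len(signal))


t = np.arange(FS) / FS                       # one second of samples
command = 0.5 * np.sin(2 * np.pi * 400 * t)  # stand-in "voice command"
attack = (1 + command) * np.cos(2 * np.pi * FC * t)  # inaudible AM signal

legacy = audible_rms(microphone(attack, suppress_ultrasound=False))
hardened = audible_rms(microphone(attack, suppress_ultrasound=True))
print(f"legacy: {legacy:.4f}, hardened: {hardened:.1e}")
```

In the legacy chain the recovered command has an RMS of roughly 0.036, while the hardened chain only produces floating-point noise. Note that the final 8 kHz filter does not help on its own: by the time it is applied, the nonlinearity has already shifted the command into the audible band, which is why the proposal targets the microphone itself.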