Cloud vs. Edge: What’s the better choice for my business?

Is a cloud-based or an edge-based IoT solution the better choice? The answer is, as so often in life: It depends. But let’s take a step back first and define what we mean by cloud, edge and fog computing.

By cloud computing we mean Internet-based computing in which processing power and data storage are centralized: the client hands both over to a central point in a data center somewhere. The client itself merely accesses the cloud, sending and receiving data. In a cloud-based IoT solution, this usually means a cloud platform that collects all kinds of data from sensors and actuators, processes it, stores it for monitoring and analysis, and controls the connected actuators remotely. In short: One center controls it all.

Edge computing is a term originating from the mobile world, where data has traditionally been compressed at a point as close as possible to the end-user device so that it travels faster through the mobile networks. Its goal was to ease the burden on the network and speed up the whole system. Edge means performing as much processing as possible at the end (the edge) of a network, usually on the connected devices themselves. Fog computing is not far from edge computing. The difference is that the processing power is usually moved from a central cloud into the local network architecture: not necessarily onto an end-user device, but rather onto a local gateway, router, etc.

Cloud or Edge?

So, what’s better? Should we continue sending all data to one central processing point in the cloud? There’s enough bandwidth available these days. And the local devices don’t really need to be smart. They can be lightweight and consume little energy, so they could even run on battery power.

Well, here’s the thing: One central processing unit that stores, analyzes and remotely controls the whole system means that if that one central unit fails or the network gets disconnected, the whole installation fails. A single point of failure is every administrator’s nightmare. OK, you might argue now that the cloud and the network can be set up redundantly, making this argument obsolete. Fair point. How about this though: Sending data into the cloud and waiting for a response takes time. It might be just a few seconds, but when you flip a light switch, or when a real-time reaction is a matter of life and death, as in autonomous traffic or industrial production, you do not have a few seconds.

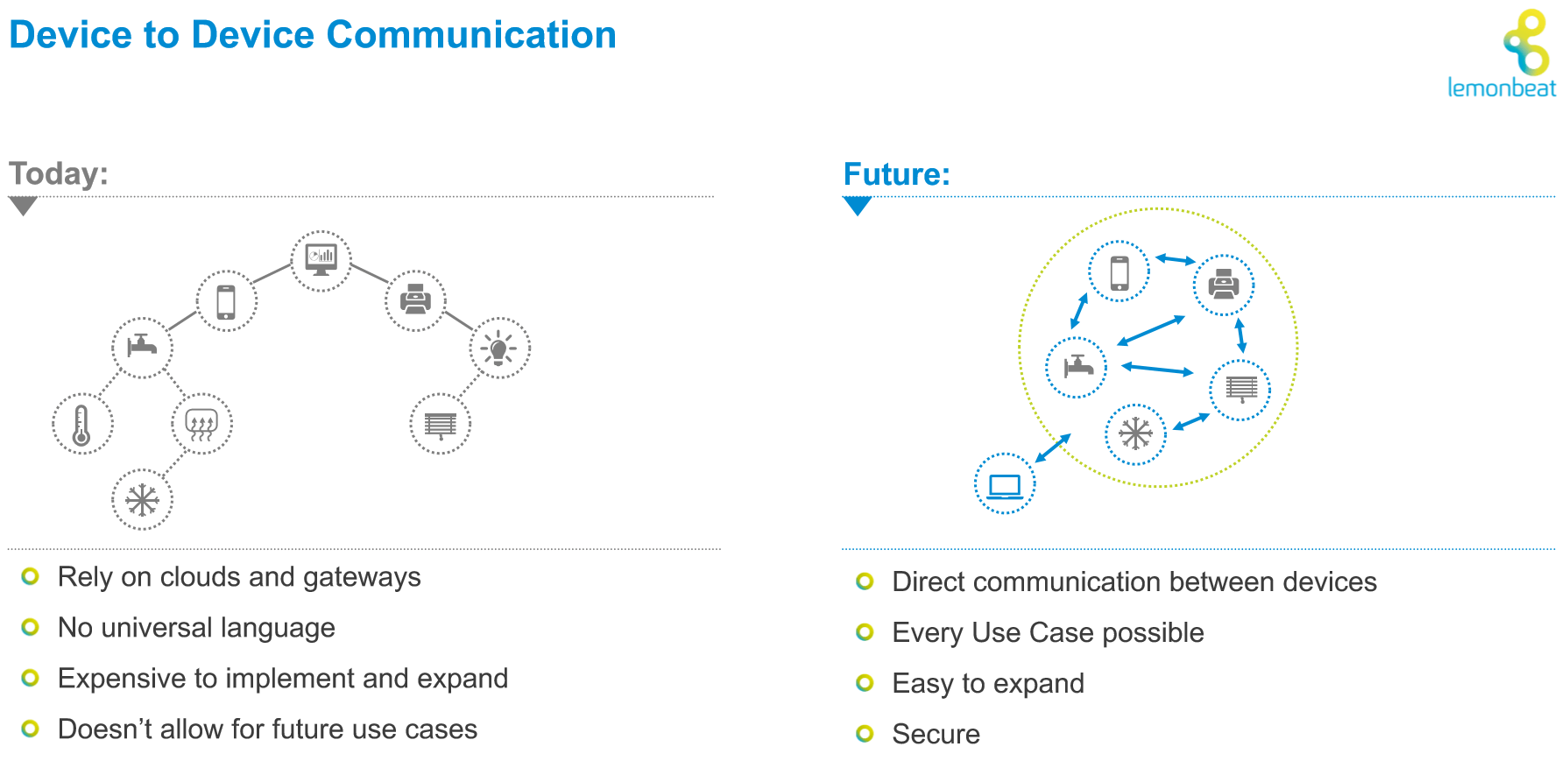

Should you go for edge computing instead, then? These days even the smallest devices can be equipped with processors that consume little energy but can still perform tasks and calculations. These devices could talk directly to each other and are therefore less sensitive, or even immune, to central server breakdowns or a lost internet connection. Since they don’t need to wait for the cloud, they can react much faster.

But there are limitations: Computing power is still limited on small devices, so more complex algorithms must run elsewhere, either in a nearby “fog” device such as a local gateway, or back in the cloud. And: If you want to remotely monitor your setup on a global scale or have the data analyzed to increase performance or optimize your production or energy consumption, there’s no way to avoid another server somewhere else.
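To make the trade-off above a bit more tangible, here is a minimal Python sketch of how one might decide where a task should run. Everything in it is an assumption for illustration: the `Task` fields, the `choose_tier` helper, and especially the round-trip and capacity figures, which are placeholders rather than measurements. The idea is simply to pick the tier closest to the device that is both fast enough and powerful enough.

```python
from dataclasses import dataclass

# Illustrative placeholder figures only; measure your own network and hardware.
ROUND_TRIP_MS = {"edge": 1, "fog": 20, "cloud": 500}
COMPUTE_CAPACITY = {"edge": 1, "fog": 10, "cloud": 1000}  # arbitrary units


@dataclass
class Task:
    name: str
    deadline_ms: int    # how quickly a response is needed
    compute_units: int  # rough cost of the task, in the same arbitrary units


def choose_tier(task):
    """Return the tier closest to the device that meets the deadline and
    has enough compute, or None if no tier qualifies."""
    for tier in ("edge", "fog", "cloud"):
        fast_enough = ROUND_TRIP_MS[tier] <= task.deadline_ms
        strong_enough = COMPUTE_CAPACITY[tier] >= task.compute_units
        if fast_enough and strong_enough:
            return tier
    return None


if __name__ == "__main__":
    for task in (
        Task("light switch", deadline_ms=100, compute_units=1),
        Task("local anomaly check", deadline_ms=200, compute_units=5),
        Task("fleet-wide analytics", deadline_ms=60_000, compute_units=500),
    ):
        print(task.name, "->", choose_tier(task))
```

With these placeholder numbers, the light switch stays on the device, the anomaly check lands on a fog gateway, and the fleet-wide analytics go to the cloud. A task that is both urgent and heavy gets `None`, which is a hint that the use case itself needs rethinking.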

A mix of both could be the perfect solution

As said at the outset, there is no single solution that fits every business. It depends on your use case. In the end, a mix of both, a hybrid system, could be perfect: Let the simple tasks be done directly between the devices. Let them talk to each other, let them do their work as quickly and independently as possible. This applies especially in the field of building automation or in Industry 4.0 setups. Make their simple functionality independent of any centralized device. This will reduce the amount of traffic that goes to the cloud, thus saving bandwidth costs. Send only the data needed for analysis and monitoring to the cloud.
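A hybrid setup like this can be sketched in a few lines of Python. The `EdgeNode` class, its method names, and the `upload` callback are all hypothetical and stand in for whatever device firmware and cloud client you actually use. The sketch shows the two halves of the idea: a simple rule handled locally without any round trip, and sensor readings summarized on the device so that only a small report goes to the cloud.

```python
from statistics import mean


class EdgeNode:
    """Hypothetical edge device: acts locally, reports summaries upstream."""

    def __init__(self, upload, batch_size=60):
        self.upload = upload          # callable that sends a dict to the cloud (assumed)
        self.batch_size = batch_size  # readings per uploaded summary
        self.buffer = []
        self.light_on = False

    def on_motion(self, detected):
        # Simple task handled on the device itself: no cloud involved.
        self.light_on = detected
        return self.light_on

    def on_temperature(self, celsius):
        # Keep raw readings local; upload one summary per full batch.
        self.buffer.append(celsius)
        if len(self.buffer) >= self.batch_size:
            self.upload({
                "count": len(self.buffer),
                "min": min(self.buffer),
                "max": max(self.buffer),
                "mean": mean(self.buffer),
            })
            self.buffer.clear()


# Usage: collect uploads in a list instead of calling a real cloud service.
sent = []
node = EdgeNode(upload=sent.append, batch_size=60)
node.on_motion(True)              # light reacts immediately
for i in range(120):
    node.on_temperature(20.0 + (i % 2))
print(len(sent))                  # 120 readings result in 2 uploads
```

The batch size is the knob that trades freshness of the cloud view against traffic. The local rule keeps working whether or not the upload succeeds.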

Too many protocols hinder the global adoption of IoT

The reason why we still tend to do everything within the cloud is the heterogeneous IoT landscape. Devices from one vendor won’t necessarily be able to speak with devices from other vendors. For instance, a KNX-speaking device doesn’t know how to talk to a DALI-speaking device. That’s why they all talk to a cloud server, which then translates and gives the commands. But as mentioned above: Having everything translated and sent back and forth takes time and makes an installation even more complex and therefore more expensive.
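What such a translation step does can be illustrated with a small Python sketch. Note the assumptions: the message shapes below are toy dictionaries, not real KNX telegrams or DALI frames, and `from_knx_like`, `to_dali_like` and `ADDRESS_MAP` are made-up names. The 0..254 output range mirrors DALI’s direct arc power levels, but the linear mapping is a simplification. The point is only that both sides are mapped through one vendor-neutral command, and that this logic could just as well run on a local gateway as in the cloud.

```python
from dataclasses import dataclass


@dataclass
class Command:
    """Vendor-neutral command used inside the translator (hypothetical)."""
    target: str
    brightness_pct: float  # 0..100


def from_knx_like(msg):
    # Assumed input shape: {"group": "1/2/3", "value": 0..255}
    return Command(target=msg["group"],
                   brightness_pct=msg["value"] / 255 * 100)


def to_dali_like(cmd, address_map):
    # Assumed output shape: {"short_address": int, "level": 0..254}
    level = round(cmd.brightness_pct / 100 * 254)
    return {"short_address": address_map[cmd.target], "level": level}


# Hypothetical mapping from a group address to a lamp's short address.
ADDRESS_MAP = {"1/2/3": 7}

incoming = {"group": "1/2/3", "value": 255}   # "full brightness" on one side
outgoing = to_dali_like(from_knx_like(incoming), ADDRESS_MAP)
print(outgoing)
```

Every additional protocol needs only one adapter to and from `Command`, rather than a dedicated translator for every pair of protocols.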

If we really want to boost the Internet of Things, then it’s less a matter of Cloud vs. Edge and more a matter of Cloud with Edge. Last but not least, the industry really needs to come together and find a way out of this Babel of languages.